Building a Portable FastAPI Backend for AWS Lambda and ECS Using Terraform

In the previous post, we explored how to deploy a FastAPI application on AWS Lambda using an ASGI adapter. This is a great option for early-stage projects: it requires zero infrastructure management, supports rapid iteration, and scales automatically.

But as your application matures, Lambda’s trade-offs can become limiting:

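- Per-request pricing that becomes expensive under steady, high-volume traffic

- CPU allocated in proportion to memory, with a hard ceiling (10,240 MB of memory and up to 6 vCPUs) and no way to scale a function beyond it

- Deployment package size limits and cold-start latency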

That’s why many teams adopt a container-based workflow that can run on both Lambda (via container images) and ECS Fargate. With a little planning, the same application code can be packaged for either platform with minimal friction.

For demonstration purposes, this setup uses an ALB to route traffic to both Lambda and ECS.

The full project code is available here.

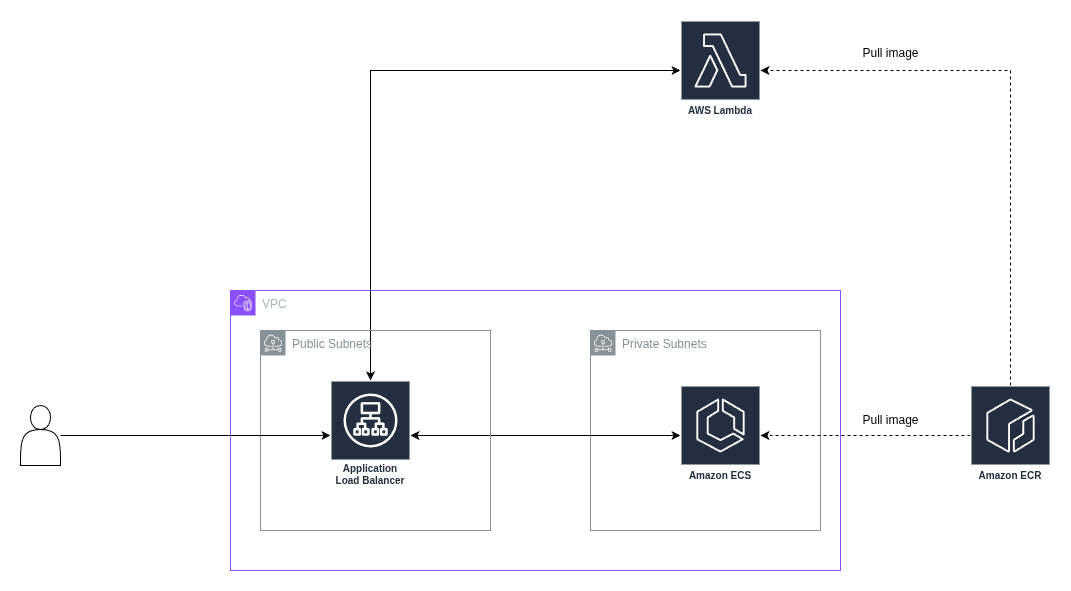

Demo Architecture

In this setup, all incoming user requests are directed to an Application Load Balancer (ALB) deployed in the public subnets of a VPC. The ALB listens on port 80 and routes traffic to two target groups, one for ECS and one for Lambda, splitting requests evenly between them.

The two backend targets consist of an AWS Lambda function and an ECS Fargate service. Both serve the same FastAPI application via Docker containers. The ECS service runs in private subnets, ensuring it’s not directly accessible from the internet, while the Lambda function runs fully managed in AWS’s serverless environment.

Project Structure

The goal is to deploy the same FastAPI app to both AWS Lambda and ECS using Docker and Terraform. The Terraform configuration lives in an infra directory, with ecs and lambda subdirectories for the platform-specific modules.

FastAPI Backend

This is a minimal FastAPI application that exposes a single route at the root path (/). When accessed, it returns a string response indicating the environment it’s running in. This value is derived from the EXECUTION_ENVIRONMENT environment variable, which is set in the Dockerfile depending on the deployment target—either “lambda” or “ecs”.
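To show the same behavior without any framework, here is a minimal sketch of the route written as a raw ASGI callable. It is an illustration, not the author's FastAPI code. It returns plain text rather than FastAPI's JSON. The "unknown" fallback and the call helper are assumptions added so the example runs on its own.

```python
import asyncio
import os


async def app(scope, receive, send):
    """Raw ASGI app: GET / reports the value of EXECUTION_ENVIRONMENT."""
    if scope["type"] != "http":
        return  # this sketch ignores lifespan and websocket scopes
    if scope["method"] == "GET" and scope["path"] == "/":
        # "unknown" is an assumed fallback for when the variable is not set
        env = os.environ.get("EXECUTION_ENVIRONMENT", "unknown")
        status, body = 200, f"Hello from {env}".encode()
    else:
        status, body = 404, b"Not Found"
    await send({
        "type": "http.response.start",
        "status": status,
        "headers": [(b"content-type", b"text/plain; charset=utf-8")],
    })
    await send({"type": "http.response.body", "body": body})


async def call(path: str) -> tuple[int, bytes]:
    """Drive the app directly, roughly as an ASGI server would, and collect the reply."""
    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app(scope, receive, send)
    return sent[0]["status"], sent[1]["body"]


if __name__ == "__main__":
    os.environ["EXECUTION_ENVIRONMENT"] = "ecs"
    print(asyncio.run(call("/")))  # (200, b'Hello from ecs')
```

Because the environment variable is read per request, the two Dockerfiles only need to set EXECUTION_ENVIRONMENT differently. The code itself stays identical.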

The app is wrapped using Mangum, a Python adapter that makes it compatible with AWS Lambda. Mangum translates incoming Lambda events (for example from API Gateway or, as in this setup, from an ALB) into ASGI requests, and converts the ASGI response back into the format Lambda returns. This makes it possible to run the same ASGI-based app in a serverless environment without changing the application code.
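The sketch below is a heavily simplified illustration of what an adapter like Mangum does for an ALB event. It maps the event's method, path, headers and body onto an ASGI scope, runs the app, and folds the ASGI messages back into the response dictionary that Lambda hands to the ALB. It is a toy, not Mangum's implementation. It ignores query strings, multi-value headers and binary responses. The echo_app, alb_event_to_scope and handler names are hypothetical.

```python
import asyncio
import base64


async def echo_app(scope, receive, send):
    """Hypothetical ASGI app used to exercise the adapter: echoes method and path."""
    text = f"{scope['method']} {scope['path']}".encode()
    await send({"type": "http.response.start", "status": 200,
                "headers": [(b"content-type", b"text/plain")]})
    await send({"type": "http.response.body", "body": text})


def alb_event_to_scope(event: dict) -> dict:
    """Map an ALB -> Lambda event onto an ASGI HTTP scope (query strings ignored)."""
    headers = [(k.lower().encode(), v.encode())
               for k, v in (event.get("headers") or {}).items()]
    return {
        "type": "http",
        "asgi": {"version": "3.0"},
        "http_version": "1.1",
        "method": event["httpMethod"],
        "path": event["path"],
        "query_string": b"",
        "headers": headers,
    }


def handler(event: dict, context=None, app=echo_app) -> dict:
    """Toy Lambda handler: run one ALB event through an ASGI app, return an ALB response."""
    body = event.get("body") or ""
    raw_body = base64.b64decode(body) if event.get("isBase64Encoded") else body.encode()
    result = {"status": 500, "headers": [], "body": b""}

    async def receive():
        return {"type": "http.request", "body": raw_body, "more_body": False}

    async def send(message):
        if message["type"] == "http.response.start":
            result["status"] = message["status"]
            result["headers"] = message.get("headers", [])
        elif message["type"] == "http.response.body":
            result["body"] += message.get("body", b"")

    asyncio.run(app(alb_event_to_scope(event), receive, send))
    return {
        "statusCode": result["status"],
        "headers": {k.decode(): v.decode() for k, v in result["headers"]},
        "body": result["body"].decode("utf-8"),  # assumes a UTF-8 text response
        "isBase64Encoded": False,
    }


if __name__ == "__main__":
    # Trimmed-down ALB event; real events also carry requestContext.elb.targetGroupArn.
    event = {"httpMethod": "GET", "path": "/", "headers": {"Host": "example"},
             "body": "", "isBase64Encoded": False}
    print(handler(event))
```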

Although the Mangum handler is required for Lambda, the app itself is compatible with ECS as well. In that environment, the app is served using a traditional ASGI server like Uvicorn. This approach enables you to maintain a single, unified codebase that works seamlessly across multiple execution environments.

Dockerfile for Lambda

This Dockerfile uses a multi-stage build to produce a container image optimized for AWS Lambda. Multi-stage builds help keep the final image size small by separating the dependency installation process from the runtime environment.

The first stage pulls the uv binary from the official image. This tool is used later to install the Python dependencies defined in the pyproject.toml file.

The second stage performs the build. It uses AWS’s official Python 3.13 base image for Lambda and sets several environment variables to improve performance and determinism. The application source code is copied into the image and dependencies are exported with uv export, then installed into the directory ${LAMBDA_TASK_ROOT}—this is where AWS Lambda expects to find all code and packages.

The final stage is also based on the AWS Lambda base image. It copies both the installed dependencies and the application code from the previous stage. The EXECUTION_ENVIRONMENT environment variable is set to “lambda” so the application can detect where it’s running. Finally, the container is configured to run the handler function in main.py as the Lambda entry point.
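The entry point is given as a "module.function" string, here pointing at handler in main.py. The sketch below resolves such a string to a callable with importlib, as a rough illustration of the mapping. It is an assumed simplification of the lookup, not the code of the Lambda runtime interface client. The resolve_handler name is hypothetical.

```python
import importlib
from typing import Callable


def resolve_handler(spec: str) -> Callable:
    """Split 'module.function' on the last dot, import the module, return the attribute."""
    module_name, sep, func_name = spec.rpartition(".")
    if not sep or not module_name or not func_name:
        raise ValueError(f"handler must look like 'module.function', got {spec!r}")
    module = importlib.import_module(module_name)
    func = getattr(module, func_name)
    if not callable(func):
        raise TypeError(f"{spec!r} is not callable")
    return func


if __name__ == "__main__":
    # In the Lambda image the spec would be "main.handler"; a stdlib target keeps this runnable.
    dumps = resolve_handler("json.dumps")
    print(dumps({"ok": True}))  # {"ok": true}
```

A typo in the handler string therefore surfaces as an import or attribute error when the function is first invoked, not at build time.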

This layout keeps the image compatible with AWS Lambda’s container runtime and keeps the final image lean, which helps cold-start latency. Installing from exported, locked dependencies makes builds reproducible.

Dockerfile for ECS

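This Dockerfile builds a container image for running the FastAPI application on AWS ECS with the Fargate launch type. It uses the official python:3.12-slim image as its base to keep the image small and production-ready. Note that the Lambda image is based on Python 3.13. Using the same Python version in both images avoids subtle behavioral differences between the two targets.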

The uv dependency management tool is copied into the image from a prebuilt source so that it can be used to install project dependencies. This avoids needing to re-download uv and supports consistent builds.

The environment variable EXECUTION_ENVIRONMENT is set to “ecs”. This is used by the application code to return which environment it’s running in, making it easier to verify and debug deployments.

The application code is copied into the /api directory, and the working directory is set accordingly. Dependencies are installed with uv sync, which reads the existing uv.lock file to produce a reproducible install inside a virtual environment.

Finally, the image runs the application with the fastapi run command (a wrapper around Uvicorn), listening on port 80. This exposes the FastAPI app in a way that works with ECS load balancing and networking.

Terraform Provider Setup

The providers are configured in infra/1-providers.tf.

This configuration sets up the Terraform providers used throughout the project. It starts by retrieving an authorization token for Amazon Elastic Container Registry (ECR) using the aws_ecr_authorization_token data source. The token contains temporary credentials that the Docker provider uses to authenticate against ECR when pushing images.

The aws provider specifies the target AWS region—in this case, eu-west-1. The docker provider then uses the ECR credentials to configure registry authentication. This allows Terraform to push container images built locally (from ECS and Lambda modules) directly to ECR during provisioning.
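Here is what that token contains. ECR returns a base64-encoded "AWS:<password>" string. The Terraform data source also exposes the already-split user_name and password attributes. The sketch below performs that split in Python. The decode_ecr_token helper is hypothetical, and the token in the example is fake.

```python
import base64


def decode_ecr_token(token: str) -> tuple[str, str]:
    """Split a base64 ECR authorization token into (username, password)."""
    decoded = base64.b64decode(token).decode("utf-8")
    # Split on the first colon only; everything after it is the password.
    username, sep, password = decoded.partition(":")
    if not sep:
        raise ValueError("token does not contain 'user:password'")
    return username, password


if __name__ == "__main__":
    # Fake token for illustration only; real tokens come from ECR and expire after 12 hours.
    fake = base64.b64encode(b"AWS:example-password").decode()
    print(decode_ecr_token(fake))  # ('AWS', 'example-password')
```

Because the token expires, Terraform fetches a fresh one on each run instead of storing credentials.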

With these providers configured, Terraform can interact with both AWS infrastructure and Docker image registries as part of a unified build and deploy workflow.

Docker Image Build with Terraform (ECS and Lambda)

Both the ECS and Lambda deployments use similar Terraform configurations to build and push Docker images to ECR. Each environment has its own Terraform module, but both use the same docker-build submodule from terraform-aws-modules/lambda/aws. This keeps the build behavior consistent and simplifies maintenance.

Setting create_ecr_repo = true lets the module create the ECR repository and manage it in Terraform state. The repository therefore does not have to be created by hand, and repeated applies reuse it. Once the image is built, it is pushed to the respective ECR repository, where it becomes available to either ECS or Lambda during deployment.
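Both ECS and Lambda reference the pushed image by its ECR URI. The URI follows the pattern <account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>. The helpers below build and parse that form. The function names and the example repository name fastapi-lambda are hypothetical. Digest-pinned references (@sha256:...) are deliberately not handled.

```python
import re

# Tag-based ECR image URI: 12-digit account, region, repository, tag.
_URI_RE = re.compile(
    r"^(?P<account>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<repository>[a-z0-9][a-z0-9._/-]*):(?P<tag>[A-Za-z0-9_][A-Za-z0-9_.-]{0,127})$"
)


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build the tag-based URI that ECS and Lambda use to pull an image from ECR."""
    uri = f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"
    if not _URI_RE.match(uri):
        raise ValueError(f"not a valid tag-based ECR image URI: {uri}")
    return uri


def parse_ecr_image_uri(uri: str) -> dict[str, str]:
    """Split a tag-based ECR image URI back into its parts."""
    m = _URI_RE.match(uri)
    if m is None:
        raise ValueError(f"not a valid tag-based ECR image URI: {uri}")
    return m.groupdict()


if __name__ == "__main__":
    uri = ecr_image_uri("123456789012", "eu-west-1", "fastapi-lambda", "v1")
    print(uri)  # 123456789012.dkr.ecr.eu-west-1.amazonaws.com/fastapi-lambda:v1
    print(parse_ecr_image_uri(uri)["repository"])  # fastapi-lambda
```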

The image builds are defined in infra/ecs/2-docker.tf (ECS) and infra/lambda/4-docker.tf (Lambda).

Lambda Function Deployment

The function is defined in infra/lambda/5-lambda.tf.

This Terraform module deploys the Lambda function using a container image that was previously built and pushed to ECR. The terraform-aws-modules/lambda/aws module abstracts much of the boilerplate required for creating and configuring a Lambda function.

The image_uri parameter references the image built by the docker_build_lambda module, and package_type = "Image" tells AWS to deploy the function using that container image.

ECS Cluster & Service

The cluster and service are defined in infra/ecs/3-ecs.tf.

This section defines the ECS infrastructure using two main modules. First, the ecs_cluster module creates an ECS cluster that supports both the FARGATE and FARGATE_SPOT capacity providers. Fargate Spot runs tasks on spare capacity at a discount but can interrupt them, so mixing the two lets the service balance cost against availability.
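The split between the two capacity providers is controlled by a capacity provider strategy. Its base value is the minimum number of tasks placed on one provider. Its weight value sets each provider's relative share of the remaining tasks. The sketch below is a simplified model of those semantics for reasoning about a configuration, not the ECS scheduler's actual algorithm. The weights in the example are hypothetical because the original values are not shown.

```python
from dataclasses import dataclass


@dataclass
class ProviderStrategy:
    name: str
    weight: int
    base: int = 0


def split_tasks(desired: int, strategy: list[ProviderStrategy]) -> dict[str, int]:
    """Approximate how `desired` tasks spread over capacity providers (base first, then weight)."""
    if sum(1 for s in strategy if s.base > 0) > 1:
        raise ValueError("only one capacity provider in a strategy may define a base")
    counts = {s.name: 0 for s in strategy}
    remaining = desired

    # Step 1: satisfy the base before anything else.
    for s in strategy:
        placed = min(s.base, remaining)
        counts[s.name] += placed
        remaining -= placed

    # Step 2: hand out the rest one task at a time to the provider furthest
    # below its weighted share (ties go to the heavier weight, then list order).
    weighted = [s for s in strategy if s.weight > 0]
    if remaining > 0 and not weighted:
        raise ValueError("at least one capacity provider needs a positive weight")
    by_weight = {s.name: 0 for s in weighted}
    for _ in range(remaining):
        target = min(weighted, key=lambda s: (by_weight[s.name] / s.weight, -s.weight))
        by_weight[target.name] += 1
        counts[target.name] += 1
    return counts


if __name__ == "__main__":
    plan = [ProviderStrategy("FARGATE", weight=1, base=2), ProviderStrategy("FARGATE_SPOT", weight=3)]
    print(split_tasks(10, plan))  # {'FARGATE': 4, 'FARGATE_SPOT': 6}
```

Keeping a small base on FARGATE guarantees that some on-demand capacity survives a Spot interruption. The weight then decides how aggressively the rest is shifted to cheaper Spot capacity.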

The ecs_service module sets up the actual service deployment. It runs a container built earlier, configured with specified CPU and memory limits. The service definition includes port mappings and integrates with an Application Load Balancer (ALB) by attaching to a specified target group.

Security groups are configured to allow inbound traffic from the ALB and outbound traffic to the internet.

Application Load Balancer

The load balancer is defined in infra/6-alb.tf.

This module sets up the Application Load Balancer (ALB) that distributes traffic between the ECS and Lambda backends. It is deployed in a VPC using public subnets and listens on HTTP port 80.

Security groups are configured to allow HTTP access from the internet and outbound traffic to the VPC CIDR range. The listeners block defines a single HTTP listener that uses weighted target group forwarding. Traffic is evenly split between the ECS and Lambda target groups (50/50 in this example).
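Weighted forwarding chooses a target group per request, so a 50/50 weight yields an even split on average rather than strict alternation. The sketch below models each request as an independent weighted choice. This is a simplifying assumption, and the ALB's internal algorithm may differ. The function names are hypothetical.

```python
import random
from collections import Counter


def pick_target_group(weights: dict[str, int], rng: random.Random) -> str:
    """Choose one target group with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated requests and count how many each group received."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    return Counter(pick_target_group(weights, rng) for _ in range(requests))


if __name__ == "__main__":
    print(simulate({"ecs": 1, "lambda": 1}, 10_000))
```

Changing the weights (for example to 90/10) is how this setup could shift traffic gradually from one backend to the other.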

Two target groups are defined: one for the Lambda function and another for the ECS service. The Lambda target group uses the lambda target type and references the deployed Lambda function's ARN. The ECS target group uses the ip target type. Fargate tasks require it because each task gets its own network interface (awsvpc network mode). The ECS service registers each task's IP address with the target group as tasks start.

Deployment

Before you begin, make sure your terminal environment has valid AWS credentials loaded and that Docker is running locally. Terraform needs the credentials to authenticate with your AWS account, and the Docker provider needs a local Docker daemon to build the images.

Navigate to the infra directory of the project and initialize the Terraform working directory by running terraform init.

Next, run terraform apply, review the execution plan it prints, and confirm to create the infrastructure.

Once Terraform completes, it will output the URL for the Application Load Balancer. This is your main entry point for accessing the application.

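Verify the deployment by sending several requests to the load balancer, for example with curl http://<load-balancer-url>. Replace <load-balancer-url> with the actual URL output by Terraform. Across repeated requests you should see a mix of Hello from lambda and Hello from ecs responses, confirming that the ALB is splitting traffic between both backends. Because the weighted split is decided per request, the responses will not necessarily alternate strictly.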
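To check the split over more than a handful of requests, a small stdlib script can hit the ALB repeatedly and tally the distinct response bodies. This is a sketch. The file name check_alb.py and the function names are hypothetical. The fetcher is injectable so the counting logic can be tested without a network. If the route returns a plain Python string, FastAPI serializes it as JSON, so the bodies may include quotes.

```python
import sys
import urllib.request
from collections import Counter
from typing import Callable


def fetch(url: str, timeout: float = 5.0) -> str:
    """GET `url` and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def tally(url: str, requests: int = 20, get: Callable[[str], str] = fetch) -> Counter:
    """Send `requests` GETs and count each distinct (whitespace-stripped) response body."""
    return Counter(get(url).strip() for _ in range(requests))


if __name__ == "__main__":
    # Usage: python check_alb.py http://<load-balancer-url>
    for body, n in tally(sys.argv[1]).items():
        print(f"{n:3d}  {body}")
```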

When you are finished, clean up the resources with terraform destroy.

Conclusion

This post demonstrated how to build and deploy a FastAPI backend that runs on both AWS Lambda and ECS Fargate, using Docker containers and Terraform. Starting from a unified application and Docker setup, we walked through how to configure Lambda- and ECS-specific Dockerfiles, define infrastructure using Terraform modules, and wire everything together behind a shared Application Load Balancer for testing.

The benefits of this approach become clear as your application matures. With Lambda, you get rapid prototyping and zero infrastructure management. With ECS, you gain more control over resources and better cost efficiency under steady traffic. Because both targets run container images and share infrastructure definitions, moving between them requires no changes to the application code.

Our test setup used weighted routing to split traffic evenly between Lambda and ECS, confirming that both environments can serve the same application code without modification. While this dual-backend approach is not intended for production use, it’s a powerful way to validate portability and plan for scaling strategies.

If you’re just starting out, Lambda provides a fast, low-maintenance option. If you’re ready to optimize and scale, ECS offers the flexibility you need. This setup lets you start with one and grow into the other—without rewriting your application or deployment logic.

Happy engineering!