Building Websites at Scale With AWS CloudFront and Hugo

Building websites has become easier than ever. Numerous platforms and third-party providers now offer tools to create and host websites within minutes, complete with custom domains, analytics, and sleek graphical interfaces.

For straightforward websites or smaller-scale projects, these platforms can be a convenient choice. However, they often fall short when it comes to flexibility, automation, and cost-effectiveness at scale. Many lack robust CLI (Command Line Interface) support for streamlining tasks, can become expensive as your needs grow, or demand significant management effort and a steep learning curve.

In this blog post, we’ll explore how to overcome these challenges by leveraging Hugo, AWS CloudFront, and GitHub Workflows to build, host, and manage websites efficiently and at scale.

Project resources can be found here.

What is Hugo?

Hugo is a powerful open-source static site generator that transforms content written in Markdown into static files such as HTML, CSS, and JavaScript. These static files are pre-rendered and served directly to users, unlike dynamic websites that generate pages in real time for each user request.

Static sites offer significant advantages—they are simpler to maintain, faster to load, and more secure. However, they aren’t the ideal solution for every use case, especially those requiring extensive interactivity or real-time updates.

Hugo powers some prominent websites, such as those of the Kubernetes project and Let’s Encrypt, thanks to a robust feature set that includes:

- Markdown-Based Content: Writing and managing content in Markdown simplifies the process of creating and organizing websites, eliminating the need to work with complex HTML or other code.

- Open Source Framework: Hugo is free to use and backed by an active community that regularly contributes improvements and offers support.

- Theme Support: Hugo has a wide ecosystem of free and paid themes, enabling users to quickly create professional-looking websites with minimal effort.

- Lightweight and Portable: The static files generated by Hugo can be hosted on virtually any platform, such as AWS, GitHub Pages, or a VPS, making it highly flexible and versatile.

What is AWS CloudFront?

In simple terms, CloudFront is a content delivery network (CDN) managed by AWS. A CDN is a network of servers deployed close to end users, serving as a caching layer to improve content delivery speed and reliability.

When a user requests content that is not present on the edge server, the edge server pulls the content from your backend and caches it. If the content is already present on the edge server, it is returned directly to the user without contacting your backend.
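
The hit-or-miss logic can be illustrated with a toy model of a single edge location. This is a simplified sketch, not how CloudFront works internally: the `EdgeCache` class and the `fetchFromOrigin` callback are hypothetical, and real edge caches also honour TTLs, cache keys, and eviction, which are ignored here.

```javascript
// Toy model of one CDN edge location (hypothetical; no TTLs, no eviction).
class EdgeCache {
  constructor(fetchFromOrigin) {
    this.fetchFromOrigin = fetchFromOrigin; // called only on a cache miss
    this.store = new Map();                 // path -> cached response body
  }

  get(path) {
    if (this.store.has(path)) {
      // Cache hit: answer directly, the origin is not contacted.
      return { body: this.store.get(path), source: "edge" };
    }
    // Cache miss: pull the object from the origin and keep a copy.
    const body = this.fetchFromOrigin(path);
    this.store.set(path, body);
    return { body, source: "origin" };
  }
}

// Usage: count how often the origin (for example an S3 bucket) is contacted.
let originRequests = 0;
const edge = new EdgeCache((path) => {
  originRequests += 1;
  return `<html>content of ${path}</html>`;
});

console.log(edge.get("/index.html").source); // → origin
console.log(edge.get("/index.html").source); // → edge
console.log(originRequests);                 // → 1
```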

This caching mechanism improves response times, reduces the load on the backend, and helps keep the site available during denial-of-service (DoS) attacks.

Architecture

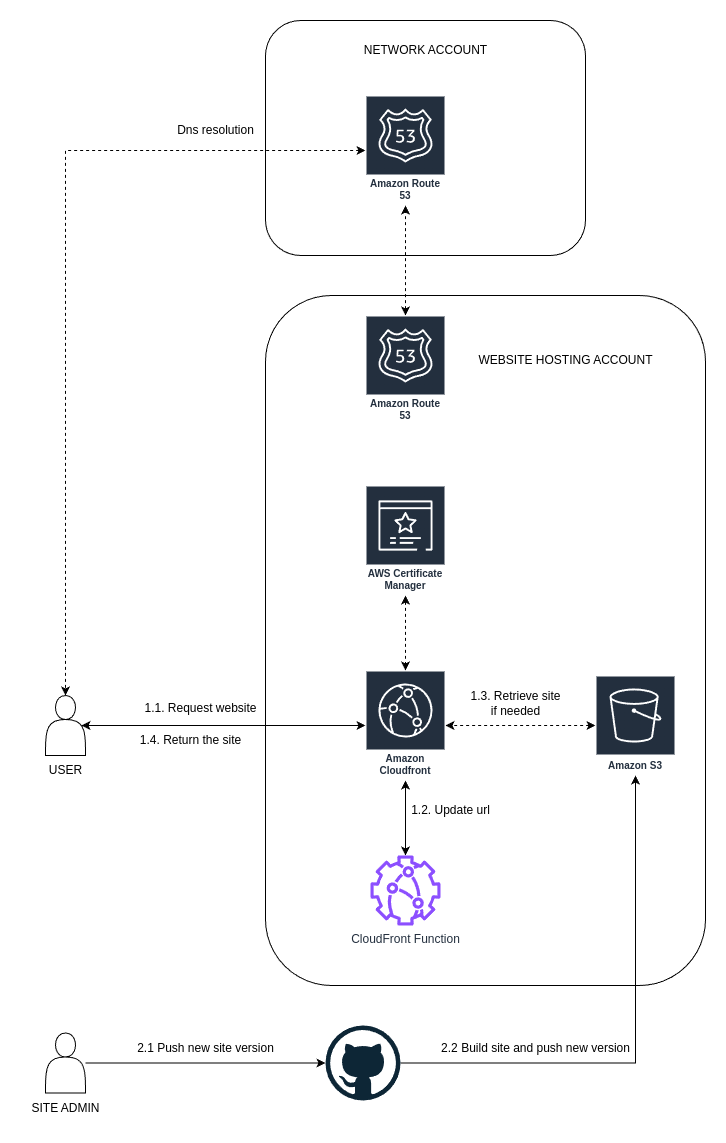

Fig 1. Single Site Architecture

Domain Name Resolution

To enhance manageability and security, the infrastructure for domain name resolution is distributed across two AWS accounts: the Network Account and the Website Hosting Account, each with distinct responsibilities.

The Network Account serves as the primary hub for managing root domains (for example, example.com).

This account is responsible for purchasing, renewing, and maintaining domains.

When a user requests DNS resolution for a website, the request initially reaches the name servers hosted in this account.

For queries about the www subdomain, the root zone delegates resolution, through NS records, to the name servers of the hosted zone managed within the Website Hosting Account.

The Website Hosting Account is dedicated to hosting the infrastructure for the websites. It includes a hosted zone for the www subdomain, where DNS records point to the appropriate CloudFront distribution.

In this setup, a typical DNS resolution flow begins with the user’s request being directed to the Network Account’s name servers.

If the query is for the www subdomain, the resolver is referred to the Website Hosting Account’s name servers, where the name resolves to the target CloudFront distribution.
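
To make the delegation flow concrete, here is a toy resolver over hypothetical zone data that mirrors Fig. 1. It is a sketch, not a DNS implementation: `example.com` and the `d111111abcdef8.cloudfront.net` distribution name are placeholders, and each NS record’s value is simplified to the name of the delegated zone instead of a list of name servers.

```javascript
// Hypothetical zone data mirroring Fig. 1 (all names are placeholders).
const zones = {
  "example.com": {
    account: "Network Account",
    records: {
      // Delegation of the www subdomain to the Website Hosting Account
      "www.example.com": { type: "NS", value: "www.example.com" },
    },
  },
  "www.example.com": {
    account: "Website Hosting Account",
    records: {
      // Alias A record pointing at the CloudFront distribution
      "www.example.com": { type: "A (alias)", value: "d111111abcdef8.cloudfront.net" },
    },
  },
};

// Start at the root zone and follow NS referrals until an answer is found.
function resolve(name, rootZone, maxReferrals = 5) {
  const visited = [];
  let zoneName = rootZone;
  for (let i = 0; i <= maxReferrals; i++) {
    const zone = zones[zoneName];
    visited.push(zone.account);
    const record = zone.records[name];
    if (record === undefined) return { answer: null, visited }; // no such name
    if (record.type !== "NS") return { answer: record.value, visited };
    zoneName = record.value; // referral: continue in the delegated zone
  }
  throw new Error("too many referrals");
}

console.log(resolve("www.example.com", "example.com"));
```

The query for `www.example.com` touches the Network Account first and is answered by the Website Hosting Account, while a name with no record in the root zone stops at the first hop.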

Content Delivery Network

Once the website is built using Hugo, it is deployed to an S3 bucket and distributed globally using CloudFront, AWS’s content delivery network. This setup ensures fast and reliable content delivery by caching the website’s static assets closer to end users through CloudFront’s network of edge locations.

To provide a secure connection to the website, CloudFront integrates with AWS Certificate Manager (ACM). ACM issues the SSL/TLS certificates and renews them automatically, so secure connections continue without manual intervention. Certificates used by CloudFront must be requested in the US East (N. Virginia) region, us-east-1, regardless of where the rest of the infrastructure is deployed.

Some Hugo themes rely on pretty URLs (for example, /posts/my-post/), which CloudFront does not handle out of the box when serving from a private S3 bucket: the distribution’s default root object applies only to the root of the site, so a request for a directory path is not mapped to the index.html file inside it. Switching Hugo to ugly URLs (the uglyURLs setting) avoids the problem, but may inadvertently break certain themes. To address this, a CloudFront function is deployed alongside the distribution. It rewrites such requests to the matching index.html object, preserving compatibility with Hugo themes.
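
A minimal sketch of such a viewer-request function, modelled on the index.html rewrite pattern from AWS’s published CloudFront Functions examples. It is written in their conservative style (plain `var` declarations) so that it should run on both the `cloudfront-js-1.0` and `cloudfront-js-2.0` runtimes. Unlike the simplest version of that pattern, it checks only the last path segment for a dot, so a path such as `/v1.2/notes` is still treated as a directory.

```javascript
// CloudFront Functions viewer-request handler (sketch).
function handler(event) {
  var request = event.request;
  var uri = request.uri;

  if (uri.endsWith("/")) {
    // "/posts/my-post/" -> "/posts/my-post/index.html"
    request.uri = uri + "index.html";
  } else {
    // Only the last path segment decides whether this looks like a file.
    var lastSegment = uri.substring(uri.lastIndexOf("/") + 1);
    if (lastSegment.indexOf(".") === -1) {
      // "/posts/my-post" -> "/posts/my-post/index.html"
      request.uri = uri + "/index.html";
    }
    // Paths with a file extension ("/css/main.css") pass through unchanged.
  }

  return request;
}
```

In Terraform, a function like this is created with the `aws_cloudfront_function` resource and attached to the distribution’s cache behavior through a `function_association` block with `event_type = "viewer-request"`.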

GitOps

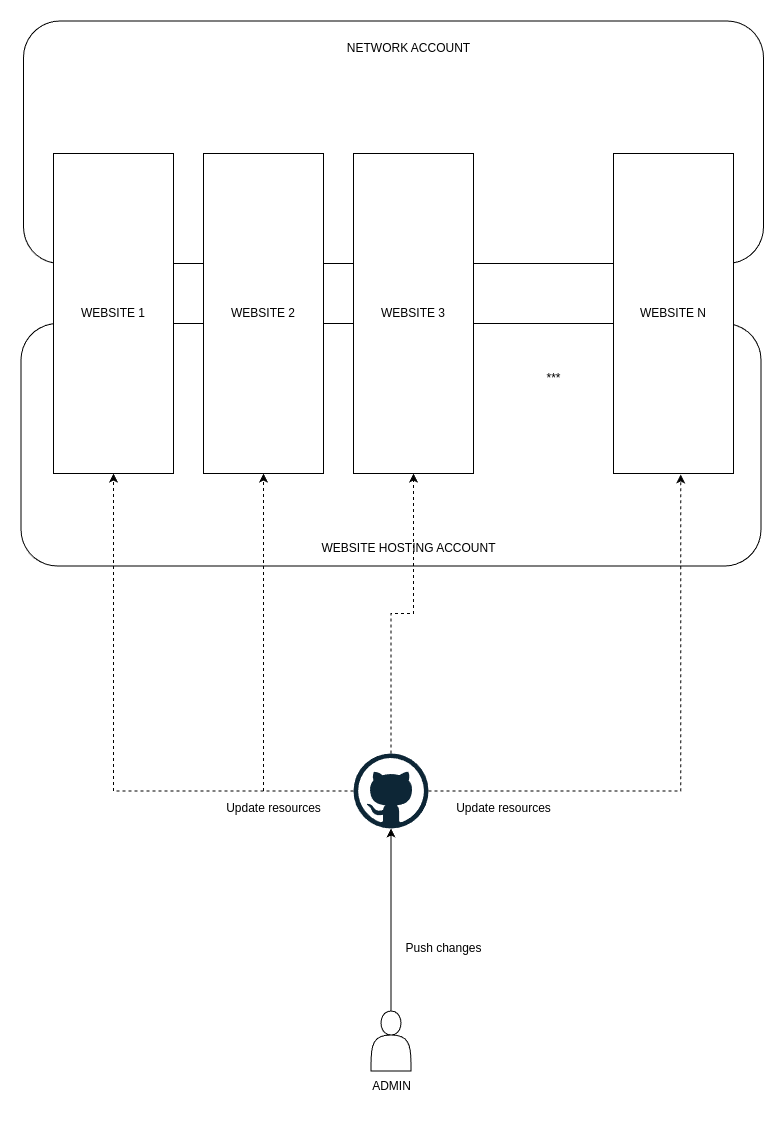

Fig 2. Deployment At Scale

One of the primary objectives of this project is to enable easy and scalable management of websites, including both infrastructure and website content, through a command-line-driven workflow.

Hugo already provides the capability to build websites locally via the CLI. However, to streamline the process further, we aim to automate deployment. All website content is stored in a GitHub repository, and deployments are triggered automatically using GitHub Workflows. These workflows, guided by tags, ensure that updates to the repository are seamlessly reflected in the corresponding S3 buckets.

The approach to structuring repositories can vary depending on your preferences. A single repository might reduce context switching, while multiple repositories can enhance modularity and maintain clear domain boundaries. Both approaches are valid, and the choice depends on your specific requirements.

In this project, we assume a structure where infrastructure resides in one repository and websites in another. This separation is particularly beneficial for large websites, which can quickly clutter a single repository.

Both repositories act as the source of truth. Any changes pushed upstream—whether additions, deletions, or updates—are automatically reflected in the infrastructure or website deployments, ensuring consistency and minimizing manual intervention.

Requirements

Before getting started, ensure you have set up GitHub-AWS integration with the necessary deployment roles in place. While this post doesn’t delve into the details, you can refer to this guide for general instructions on configuring a multi-account cloud deployment using Terraform and GitHub Actions.

We also assume that root hosted zones have already been created in the Network Account. These hosted zones are typically generated automatically when a domain is purchased through AWS. Therefore, this guide starts from the point where the hosted zones already exist, and the necessary infrastructure for the site is ready to be added.

Directory Structure

The project leverages local Terraform modules and uses Terragrunt to orchestrate the deployment. The directory structure of the project is as follows:

The live directory contains the configuration files for Terragrunt.

Although Terragrunt’s primary advantage is its ability to keep state files small and manage multiple stacks independently, in this project, all resources are managed within a single stack.

This decision simplifies management, as updates are only required when adding or removing websites.

The _envcommon directory houses configuration files used to manage website infrastructure.

Adding a new website is as simple as creating an entry in the configuration file, while deleting a website requires removing the corresponding entry.

The accounts.hcl file contains account details and deployment roles.

The websites.hcl file defines the configuration for websites.

Adding a new website requires specifying its details in this file.

Terraform Modules

The modules directory contains the Terraform code, structured to manage individual websites as well as collections of websites.

The single-site sub-module implements the architecture shown in Figure 1. Meanwhile, the web-hosting module acts as a wrapper that orchestrates the deployment of multiple websites based on configuration files located in the _envcommon directory.

To keep this post concise, not all files are shown here. You can view the complete code here.

Single Website

The single-site module uses tags to link websites with infrastructure.

A dedicated Route 53 hosted zone is created in the Website Hosting Account to manage the www subdomain.

It includes an alias A record pointing to the CloudFront distribution that hosts the website.
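
The post manages this record with Terraform. For reference, the sketch below builds the equivalent request body for Route 53’s `ChangeResourceRecordSets` API as a plain object; the function name `aliasRecordChange` and the domain names are hypothetical. `Z2FDTNDATAQYW2` is the fixed hosted zone ID that Route 53 uses for all CloudFront alias targets, and alias records carry no TTL.

```javascript
// Hosted zone ID that Route 53 uses for every CloudFront alias target.
const CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2";

// Build a ChangeBatch that creates or updates an alias A record for the site.
function aliasRecordChange(siteName, distributionDomain) {
  return {
    Changes: [
      {
        Action: "UPSERT",
        ResourceRecordSet: {
          Name: siteName,
          Type: "A",
          // No TTL: alias records inherit behaviour from the target.
          AliasTarget: {
            HostedZoneId: CLOUDFRONT_HOSTED_ZONE_ID,
            DNSName: distributionDomain,
            EvaluateTargetHealth: false, // must be false for CloudFront targets
          },
        },
      },
    ],
  };
}

console.log(JSON.stringify(aliasRecordChange("www.example.com", "d111111abcdef8.cloudfront.net"), null, 2));
```

The resulting object has the shape expected for the `ChangeBatch` parameter, for example when sent with the AWS SDK for JavaScript v3 `ChangeResourceRecordSetsCommand` together with the ID of the www hosted zone.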

In the Network Account, an NS record for the www subdomain is added to the root hosted zone, listing the name servers of the newly created hosted zone. This delegates the subdomain to the Website Hosting Account.
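
Delegation boils down to a single NS record in the parent zone. A minimal sketch of that record as a Route 53 `ChangeBatch`, assuming the name servers have already been read from the new hosted zone; `delegationChange`, the 300-second TTL, and the name server hostnames are illustrative placeholders.

```javascript
// Build the ChangeBatch that delegates a subdomain by adding an NS record
// to the parent (root) hosted zone. The TTL is an example value.
function delegationChange(subdomain, nameServers, ttlSeconds = 300) {
  if (nameServers.length === 0) {
    throw new Error("at least one name server is required");
  }
  return {
    Changes: [
      {
        Action: "UPSERT",
        ResourceRecordSet: {
          Name: subdomain,
          Type: "NS",
          TTL: ttlSeconds,
          // One resource record per name server of the child zone
          ResourceRecords: nameServers.map((ns) => ({ Value: ns })),
        },
      },
    ],
  };
}

// Placeholder name servers; a real hosted zone reports its own four.
const batch = delegationChange("www.example.com", [
  "ns-2048.awsdns-64.com",
  "ns-2049.awsdns-65.net",
  "ns-2050.awsdns-66.org",
  "ns-2051.awsdns-67.co.uk",
]);
console.log(JSON.stringify(batch, null, 2));
```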

To enable HTTPS traffic, a certificate is provisioned using AWS Certificate Manager with DNS validation.
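
With DNS validation, ACM hands back a CNAME record per domain name that must exist in the hosted zone before the certificate is issued. The sketch below converts those records, in the `DomainValidationOptions` shape returned by ACM’s `DescribeCertificate`, into Route 53 changes. The function name and the sample values are hypothetical. Records are de-duplicated by name because a domain and its wildcard (`example.com` and `*.example.com`) share the same validation record.

```javascript
// Convert ACM validation options into Route 53 UPSERT changes.
function validationChanges(domainValidationOptions) {
  const byName = new Map();
  for (const option of domainValidationOptions) {
    const rr = option.ResourceRecord; // { Name, Type: "CNAME", Value }
    // ResourceRecord can be missing until ACM has generated it.
    if (rr && !byName.has(rr.Name)) byName.set(rr.Name, rr);
  }
  return {
    Changes: [...byName.values()].map((rr) => ({
      Action: "UPSERT",
      ResourceRecordSet: {
        Name: rr.Name,
        Type: rr.Type,
        TTL: 300, // example value
        ResourceRecords: [{ Value: rr.Value }],
      },
    })),
  };
}

// Hypothetical input: the apex and its wildcard share one record.
const options = [
  { DomainName: "example.com", ResourceRecord: { Name: "_a1.example.com.", Type: "CNAME", Value: "_b1.acm-validations.aws." } },
  { DomainName: "*.example.com", ResourceRecord: { Name: "_a1.example.com.", Type: "CNAME", Value: "_b1.acm-validations.aws." } },
  { DomainName: "www.example.com", ResourceRecord: { Name: "_a2.www.example.com.", Type: "CNAME", Value: "_b2.acm-validations.aws." } },
];
console.log(validationChanges(options).Changes.length); // → 2
```

Keeping these records in place afterwards is what allows ACM to renew the certificate automatically.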

The website is hosted on CloudFront with an S3 backend. The S3 bucket is configured as private with encryption, and its policy allows access only from the CloudFront distribution. CloudFront is set up to redirect HTTP requests to HTTPS and to run a custom function for URL adaptation.
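
The post does not say which CloudFront access mechanism the bucket policy uses. Assuming origin access control (OAC), which AWS recommends over the legacy origin access identity, the policy typically looks like the one built below. The bucket name, account ID, and distribution ID are placeholders, and `cloudFrontOnlyBucketPolicy` is a hypothetical helper.

```javascript
// Build an S3 bucket policy that lets a single CloudFront distribution read
// objects, following the origin access control (OAC) pattern.
function cloudFrontOnlyBucketPolicy(bucketName, accountId, distributionId) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "AllowCloudFrontServicePrincipalReadOnly",
        Effect: "Allow",
        Principal: { Service: "cloudfront.amazonaws.com" },
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucketName}/*`,
        Condition: {
          // Without this condition, any CloudFront distribution, including
          // ones in other accounts, could read the bucket.
          StringEquals: {
            "AWS:SourceArn": `arn:aws:cloudfront::${accountId}:distribution/${distributionId}`,
          },
        },
      },
    ],
  };
}

console.log(JSON.stringify(cloudFrontOnlyBucketPolicy("example-site-bucket", "111122223333", "EDFDVBD6EXAMPLE"), null, 2));
```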

The CloudFront function adapts URLs to ensure compatibility with themes that use pretty URLs.

The function’s code is shown below.

Scaling Websites

To deploy multiple websites, the single-site module is wrapped in the web-hosting module, which iterates through a list of site configurations.

Each website is uniquely identified by its domain name, which gives every site’s module instance a stable address in Terraform: adding or removing one site does not affect the resources of the others.
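
Why key sites by domain name? The toy simulation below mimics how Terraform addresses module instances created with `count` (by position) versus `for_each` (by key), assuming the wrapper module uses `for_each` over the site configurations. The module name `site` and the helper functions are hypothetical. Removing one site from the middle of the list shifts every later index, whereas string keys leave the other sites untouched.

```javascript
// Module instance addresses as Terraform would form them.
function addressesByIndex(domains) {
  return domains.map((d, i) => [`module.site[${i}]`, d]); // count
}
function addressesByKey(domains) {
  return domains.map((d) => [`module.site["${d}"]`, d]); // for_each
}

// Addresses that now point at a different site (Terraform would have to
// modify or replace them) or that disappear (Terraform would destroy them).
function changedInstances(before, after) {
  const afterMap = new Map(after);
  return before
    .filter(([addr, domain]) => afterMap.get(addr) !== domain)
    .map(([addr]) => addr);
}

const before = ["a.example.com", "b.example.com", "c.example.com"];
const after = ["a.example.com", "c.example.com"]; // b.example.com removed

console.log(changedInstances(addressesByIndex(before), addressesByIndex(after)));
// → [ 'module.site[1]', 'module.site[2]' ]
console.log(changedInstances(addressesByKey(before), addressesByKey(after)));
// → [ 'module.site["b.example.com"]' ]
```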

GitOps

The infrastructure deployment process is triggered automatically when changes are detected in the infrastructure directory.

The workflow first assumes an initial role in AWS, which it then uses to assume the deployment roles in the target accounts.

For a detailed guide on configuring multi-account deployment with Terraform and GitHub Actions, refer to this post.

Website Updates

If your project uses separate repositories for website content, you will need to add the infrastructure repository as a submodule within the infra-repo directory of your website repository.

Ensure that you have configured a GitHub PAT (Personal Access Token) in the repository secrets, allowing the workflow to access and pull the submodule during the checkout step.

When new content is pushed upstream, the workflow triggers automatically. It pulls the website repository along with the infrastructure submodule. Hugo is then installed, and the website is built. The workflow retrieves information about the target S3 buckets from the Terragrunt state file and syncs the updated version of the website to the corresponding S3 bucket.
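
The post does not show how the bucket names are read. One possible approach, sketched below, assumes the infrastructure exposes a hypothetical `site_buckets` output that maps each domain to its bucket and reads it with `terragrunt output -json`, which prints the same JSON format as `terraform output -json`. It then assembles an `aws s3 sync` command for Hugo’s default `public/` output directory; the `--delete` flag removes objects that no longer exist in the build, so deleted pages also disappear from the bucket.

```javascript
// Look up a site's bucket in `terragrunt output -json` output, whose format
// is { outputName: { sensitive, type, value } }.
// The output name "site_buckets" is hypothetical.
function bucketForSite(outputJson, domain, outputName = "site_buckets") {
  const outputs = JSON.parse(outputJson);
  const output = outputs[outputName];
  if (!output) throw new Error(`output "${outputName}" not found`);
  const bucket = output.value[domain];
  if (!bucket) throw new Error(`no bucket recorded for ${domain}`);
  return bucket;
}

// Arguments for `aws s3 sync`: mirror Hugo's build directory into the bucket.
function syncArgs(bucket, publishDir = "public") {
  return ["s3", "sync", `${publishDir}/`, `s3://${bucket}`, "--delete"];
}

// Sample output in the shape Terraform produces (values are placeholders).
const sample = JSON.stringify({
  site_buckets: {
    sensitive: false,
    type: ["map", "string"],
    value: { "www.example.com": "www-example-com-site" },
  },
});

const bucket = bucketForSite(sample, "www.example.com");
console.log(["aws", ...syncArgs(bucket)].join(" "));
// → aws s3 sync public/ s3://www-example-com-site --delete
```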

Conclusion

In this blog post, we explored an effective approach to building, hosting, and managing websites at scale.

We have seen how to easily build websites using Hugo. We covered the secure hosting of static websites on AWS using the fully managed content delivery network CloudFront. Additionally, we looked at how to organize and scale hosting infrastructure with Terraform and Terragrunt. Finally, we discussed automating infrastructure management and website updates using GitHub Workflows, streamlining operations and minimizing manual effort.

Happy engineering!